Bottom line? Don't use it! At least don't use it for a SQL cluster directly.

Recently I stumbled on a configuration using S2D (Storage Spaces Direct) for a windows cluster that had a SQL Server cluster built on top.

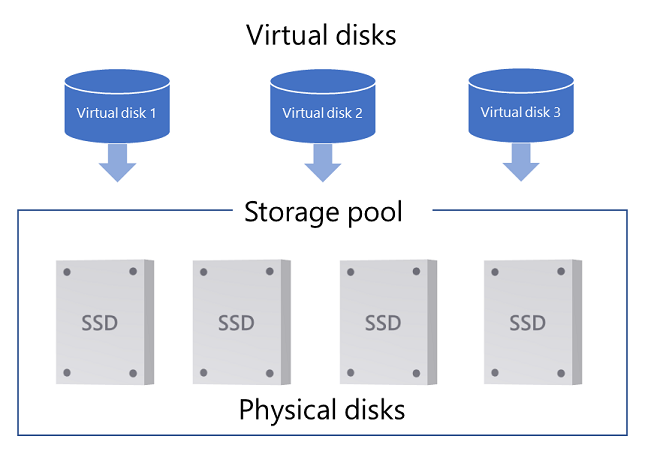

It's my understanding that the primary use of S2D is to create a local SAN from many local attached drives to a server to create faster drives for a VM host server if that is what you are designing. (see above image) This is not really something you want to do for a windows cluster running SQL Server and I wouldn't advise it for a SQL Server VM in Azure or AWS either.

The way this was set up was two physical drives on one server and two drives on another server, neither of the drives were shared. The S2D was created cross-server, on those four drives and set up as CSVs. Once one of the nodes went down, the entire cluster went offline.

Because of that, we started to add new shared drives to both VM nodes to migrate away from S2D. When we did this, unfortunately, we learned the hard way that S2D grabs them right away, adds them to the already existing storage pool.

The first thing we had to do was stop the auto-add. Run this in PowerShell on the active node.

Get-StorageSubSystem | Set-StorageHealthSetting -Name "System.Storage.PhysicalDisk.AutoPool.Enabled" -Value False

Now we can create a new shared disk and attach it to the active node first. After it's added, S2D grabs it and adds its own disk ID, starting at 1000. Local disks start at 1.

Using PowerShell, get the disk ID's

Get-PhysicalDisk | select deviceid, size

PS C:\> Get-PhysicalDisk | select deviceid, size

deviceid size

-------- ----

0 136367308800

1 68719476736

2010 8796093022208

5 8796093022208

1003 1098437885952

2002 1098437885952

2003 1098437885952

1002 1098437885952

4 8796093022208

2011 8796093022208

2008 8796093022208

PS C:\> $disk = Get-PhysicalDisk | where deviceid -eq 2008

PS C:\> Set-ClusterS2DDisk -CanBeClaimed $false -PhysicalDiskIds $disk.UniqueId

PS C:\> $disk = Get-PhysicalDisk | where deviceid -eq 2010

PS C:\> Set-ClusterS2DDisk -CanBeClaimed $false -PhysicalDiskIds $disk.UniqueId

PS C:\> $disk = Get-PhysicalDisk | where deviceid -eq 2011

PS C:\> Set-ClusterS2DDisk -CanBeClaimed $false -PhysicalDiskIds $disk.UniqueId

PS C:\> Get-PhysicalDisk | select deviceid, size

deviceid size

-------- ----

0 136367308800

1 68719476736

1006 8796093022208

5 8796093022208

1003 1098437885952

2002 1098437885952

2003 1098437885952

1002 1098437885952

4 8796093022208

1009 8796093022208

1010 8796093022208PS C:\> $disk = Get-PhysicalDisk | where deviceid -eq 1006

PS C:\> Set-ClusterS2DDisk -CanBeClaimed $false -PhysicalDiskIds $disk.UniqueId

PS C:\> $disk = Get-PhysicalDisk | where deviceid -eq 1009

PS C:\> Set-ClusterS2DDisk -CanBeClaimed $false -PhysicalDiskIds $disk.UniqueId

PS C:\> $disk = Get-PhysicalDisk | where deviceid -eq 1010

PS C:\> Set-ClusterS2DDisk -CanBeClaimed $false -PhysicalDiskIds $disk.UniqueIdPS C:\> Get-PhysicalDisk | select deviceid, size

deviceid size

-------- ----

5 8796093022208

3 8796093022208

0 136367308800

1 68719476736

7 8796093022208

2 8796093022208

4 8796093022208

6 8796093022208Add the disks to the cluster.

Disks now appear as 'Available Storage'.

Turn on maintenance mode

Now open Disk Management and format the new drives and drive letters. Format the disk of course using Microsoft's Best Practice for data or logs.

Turn off maintenance mode for the newly formatted drives.

Turn on maintenance mode

Now open Disk Management and format the new drives and drive letters. Format the disk of course using Microsoft's Best Practice for data or logs.

Drives should show formatted and have an assigned drive letter.

Now you can add storage to the SQL Server cluster role. Right mouse click on the SQL Cluster Role.

Comments

Post a Comment