Recently I encountered a Microsoft SQL Server with relatively high and very consistent CPU usage with few peaks and valleys.

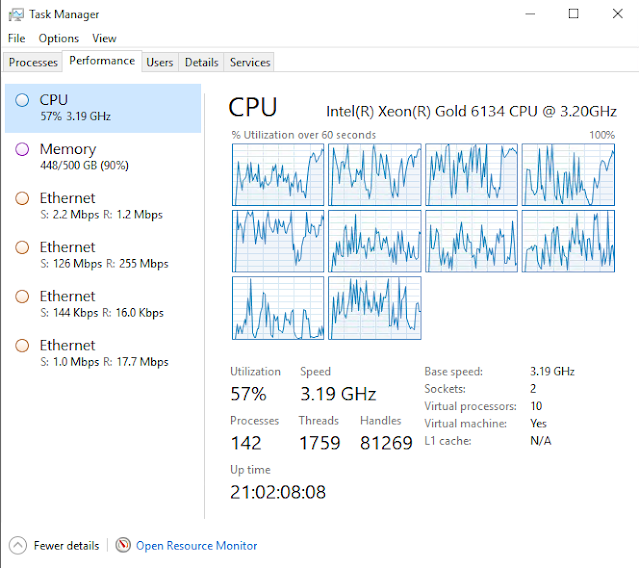

When checking the server CPU configuration, I noticed that the server had 10 virtual CPUs.

When I checked the SQL Server configuration, the server was only using a max of 4 for parallel processing.

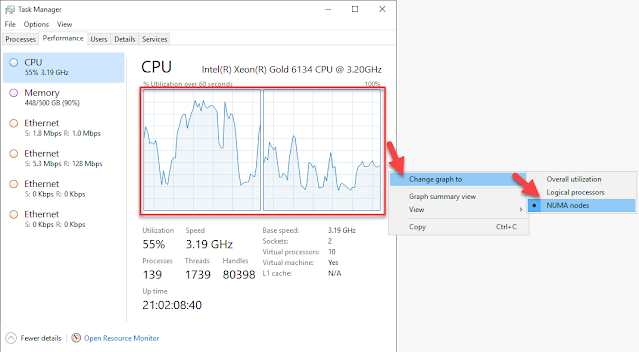

Next, I looked to see if the server had NUMA nodes and how many processors were in each NUMA node.

To find out how many NUMA nodes your server or VM might have, open up task manager, go to the CPU tab, right mouse click, select 'change graph to', then select 'NUMA nodes'.

The configuring of SQL Server's Max Degree of Parallelism is very important to keep the CPU and memory usage to one side of a NUMA node for best performance. If you use more than the CPU available on one side of the NUMA node, you will have increased latency. In this instance, this server had 2 NUMA nodes.

This server was configured to only use 4 of the 5 CPUs on each side of the NUMA node. Therefore, it left 1 CPU on the table and did not use it for parallel processes.

I changed the setting from 4 to 5.

Immediately you could see the overall server CPU usage drop.

You can see here that the transactions now have peaks and valleys.

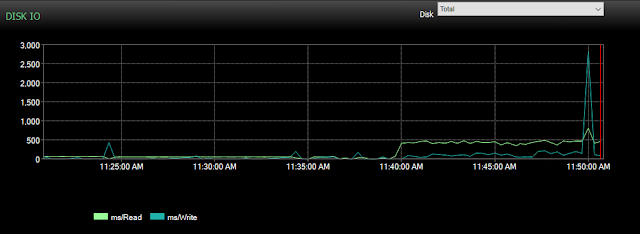

I also noticed another change to Disk IO. The server was able to start working, which required more IO.

The server was actually able to do more work and pull more data from the SAN. Here is what that looked like.

In conclusion, does Max Degree of Parallelism and NUMA node matter? Yes!

Comments

Post a Comment